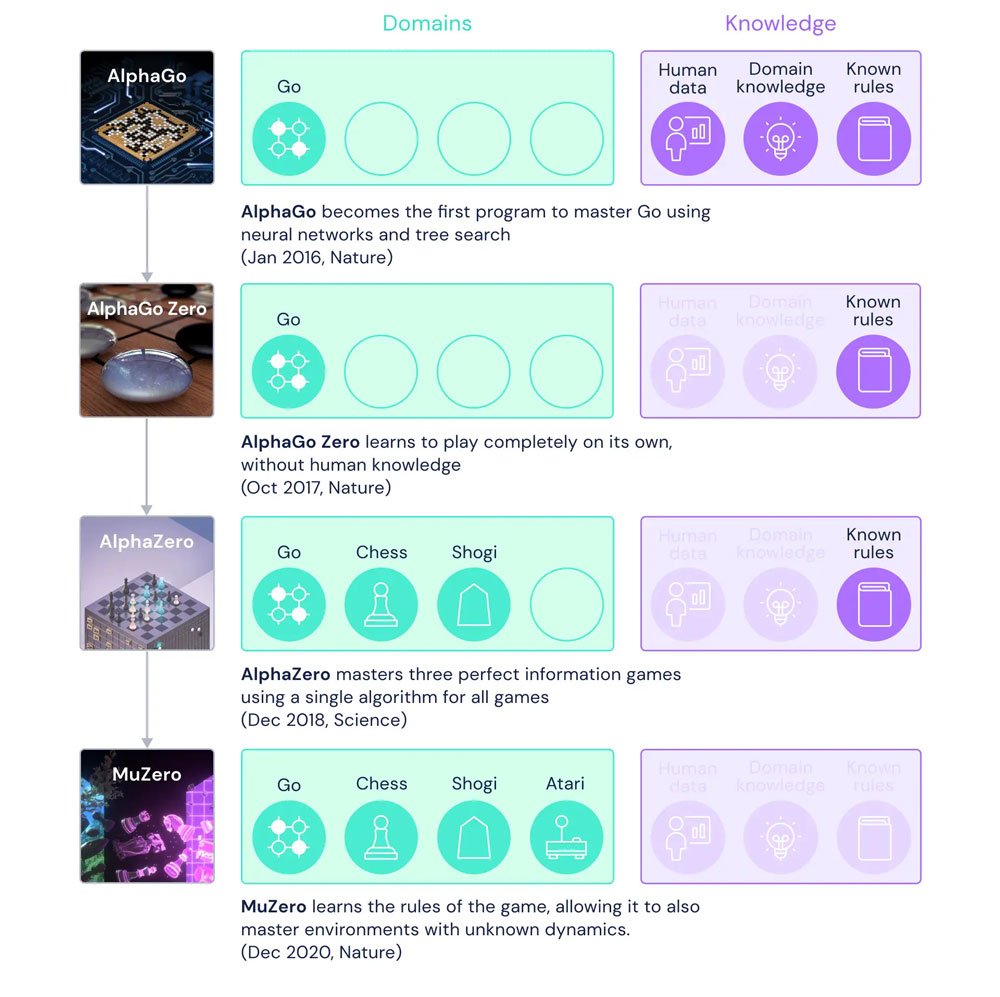

In recent weeks, the scientists testing DeepMind’s latest artificial intelligence have been seeing “Game Over” more and more often. After AlphaGo and AlphaZero, which long ago defeated humans at Go and chess, the Google subsidiary is going one step further: after board games, it is now the turn of video games.

In 2020, you read more and more often about AI systems that played strategy games such as StarCraft or Dota, but their path to victory depended heavily on human input. The AI gamers first had to be taught how to play before they could begin learning on their own.

MuZero, DeepMind’s latest AI, no longer needs this help from its human counterparts. Like a gamer starting a game for the first time, it works out what is allowed and what is not by trying out different actions. As with many other AI systems, learning here is reward-based: what capturing a piece is in chess, points and high scores are in the new test games.

When playing Ms. Pac-Man, for example, MuZero adjusts its playing style as often as necessary until it scores as many points as possible, entirely without instructions or tips from the researchers. It also transfers what it has learned to other games faster than its predecessors did. Within two to three weeks, MuZero can teach itself to play any Atari game. In doing so, the system draws on previous experience and focuses only on the resources and moves that are relevant to winning.

What sounds like a gimmick has enormous potential. Handling data efficiently and reacting quickly to changing situations could, for example, greatly improve the systems in self-driving cars or help develop new strategies and combinations of medicines. The independent learning and combining of data will make it possible to